Introduction

Enterprises are rapidly exploring how to integrate Generative AI (GenAI) into core operations, with large language models (LLMs) at the center of this shift. One area gaining traction is using LLMs to make data more accessible to non-technical users.

Business users often need on-demand access to data for tasks like tracking marketing campaign performance, analyzing sales by region or customer segment, or monitoring operational KPIs. Enabling them allows enterprises to make faster, more informed decisions without relying on IT or data teams. This democratization of data accelerates productivity, enhances operational efficiency, and enables personalized customer experiences. By empowering users across departments to explore and analyze data independently, organizations foster collaboration, improve transparency, and build a truly data-driven culture.

There are off-the-shelf solutions that convert natural language into executable queries (Databricks AI/BI Genie or Snowflake Cortex). Enterprises can also choose to build custom text-to-SQL solutions.

Both help reduce that dependency by allowing users to ask data questions directly in natural language.

To be effective, these systems must go beyond language understanding:

- Data context: Interpret metadata, table relationships, and naming conventions.

- Query accuracy: Generate valid SQL that reflects user intent and executes correctly.

- Performance: Ensure queries are efficient and scalable across large datasets.

While text-to-SQL solutions require effort to incorporate some of these features, enterprises still choose them owing to control and transparency around key aspects such as governance of the LLMs, data transfer, security, and privacy concerns.

When building these custom solutions, we aim to understand the impact of data issues on them.

Business leaders recognize that poor data quality – missing values, inconsistent formats, and outdated dictionaries – can undermine any analytics initiative. The key question: Can LLMs generate useful, accurate SQL when the underlying data is messy or poorly documented? Or does the “Garbage In, Garbage Out” principle hold, even for state-of-the-art GenAI?

We investigate whether large language models (LLMs) can still generate useful, accurate SQL queries when the underlying data lacks structure or documentation. In other words, can LLMs infer enough context from imperfect data to support effective self-service analytics?

Methodology

To investigate whether garbage in really does entail garbage out or if we can engineer our way into an efficient SQL solution with properly trained LLMs, we evaluated three scenarios, each representing a different level of data readiness:

- Bronze (Raw, minimally processed data): Poor metadata, little to no documentation.

- Silver (Cleansed, validated data): Improved structure and metadata.

- Gold (Curated, analysis-ready data): Optimized for AI and BI, with rich context.

For each scenario, we assessed:

- Data awareness: How much schema/business context is provided to the LLM.

- Prompt engineering: Use of few-shot examples and business logic.

- Latency: Time to generate and execute queries.

- Accuracy: Percentage of correct, business-intent-aligned SQL queries.

To facilitate this comparison, we designed and implemented a custom architecture that served as our baseline. This architecture provided the flexibility to control the amount of information supplied to the LLM. In the following sections, we will outline the key components of our architecture.

Architecture

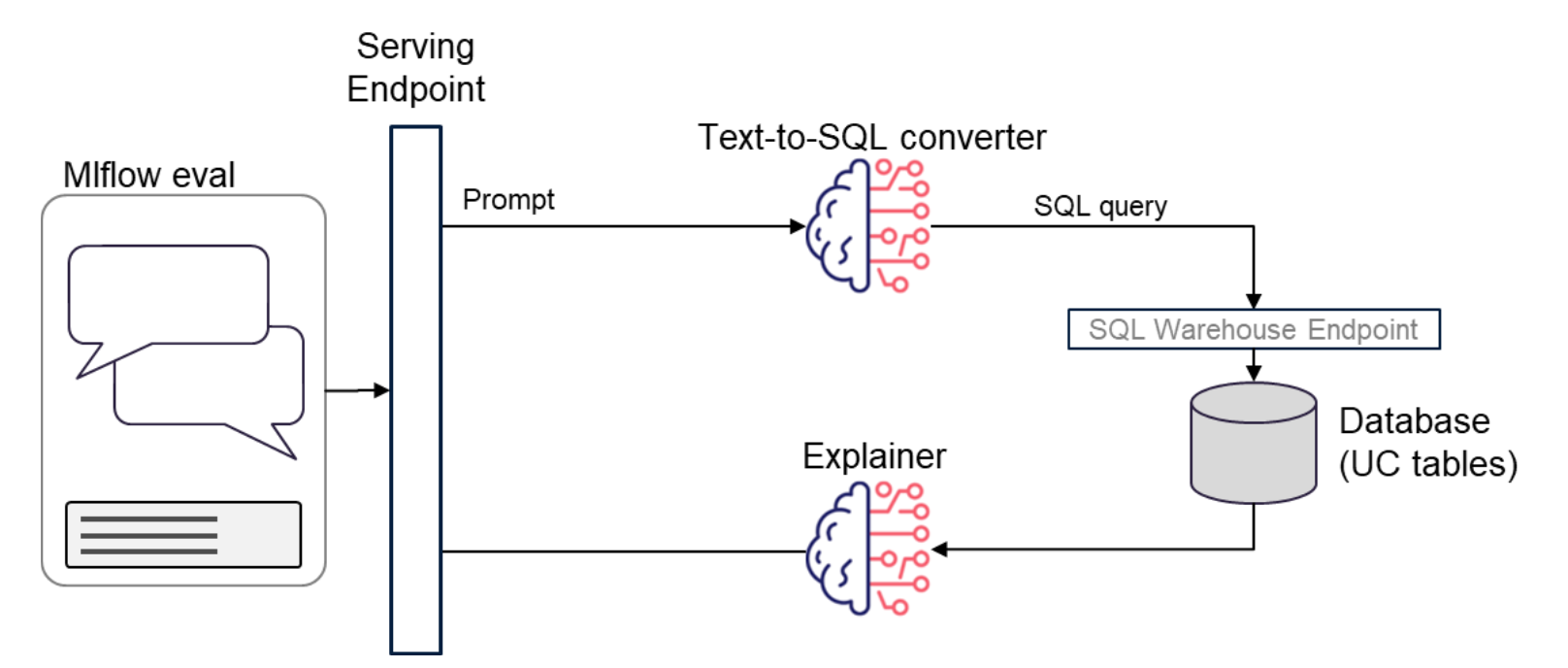

Our architecture integrates several components that collectively enhance the overall workflow. The process begins with a user query, focusing on the Text-to-SQL conversion phase. The generated SQL statements are executed against a database, serving as the primary performance metric.

Since SQL queries typically return tabular numerical results, we incorporated an additional LLM to contextualize and explain the output. This “Explainer” model takes the initial user query along with the SQL results and generates a meaningful response. The final output is delivered to the user interface (UI) via a Databricks Serving Endpoint. To evaluate the output, we use MLFlow.eval

Test Scenarios

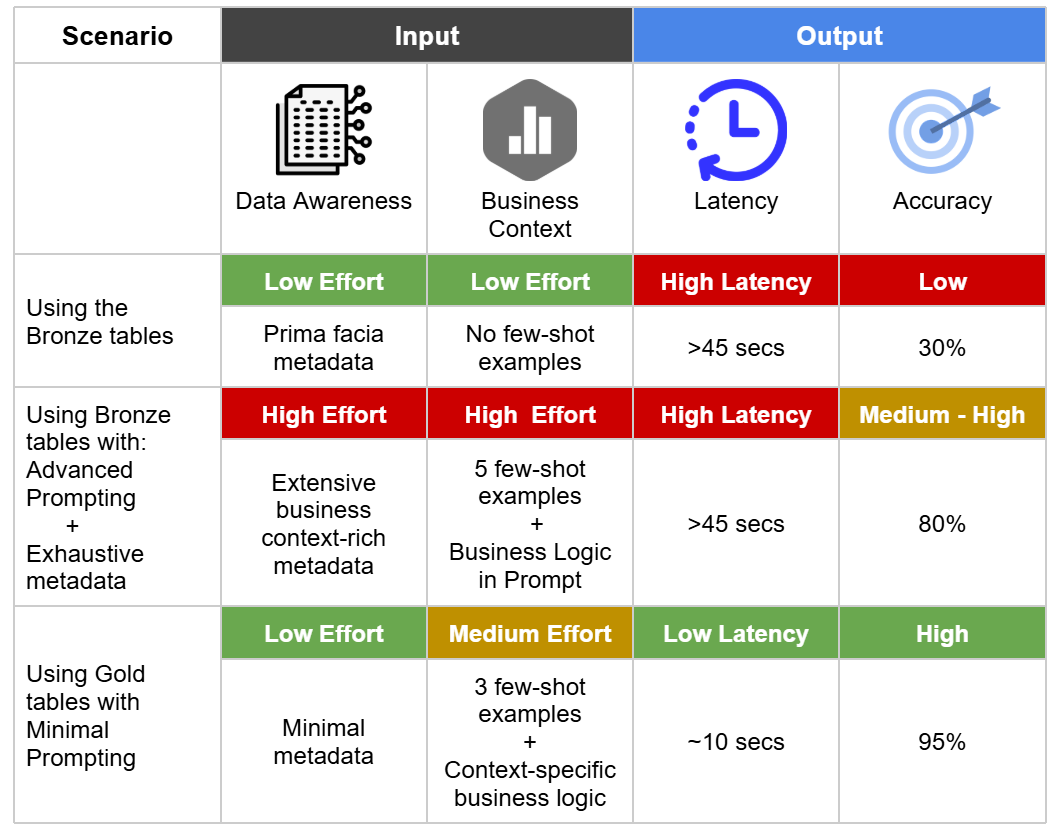

We iterate through the multiple stages, delineated into three key scenarios:

- Using Bronze Tables

- Using Bronze Tables with Advanced Prompting

- Using Gold Layer Tables with minimal prompting: Leveraging streamlined/ engineered tables off of the streaming datasets

Each scenario varied the input quality to study the impact on the output, i.e., the generated SQL statement.

The first scenario uses raw bronze tables with minimal data awareness and business context. While this requires little upfront effort, it results in high latency and low accuracy, making it a poor choice for production use.

The second approach still relies on bronze tables but adds advanced prompting. When only prima facie metadata is used with no few-shot examples, accuracy remains low despite the high effort. However, when the prompt includes rich metadata, few-shot examples, and embedded business logic, accuracy improves significantly to 80%, though latency remains high (over 45 seconds).

The final and most efficient setup uses well-structured gold tables with minimal prompting. By leveraging curated data and embedding only light business logic in the prompt, this approach achieves high accuracy (95%) with low latency (~10 seconds) and reduced engineering effort.

Key Takeaways

- Data quality is king: Even the most advanced LLMs cannot fully compensate for missing or inconsistent metadata. Clean, curated data (gold layer) is essential for reliable, high-accuracy Text-to-SQL solutions.

- Prompt engineering helps, but has limits: More examples and richer context improve performance, but add latency and complexity. There’s a point of diminishing returns if the underlying data remains poor.

- Semantic layers and ontologies matter: Solutions that enrich schema context, whether via semantic models, ontologies, or curated dictionaries, consistently outperform those relying on raw data alone.

- Performance trade-offs are real: Efforts to boost accuracy (via prompt engineering or metadata enrichment) can increase latency and operational complexity. Striking the right balance is crucial for production use.

“Garbage In, Garbage Out” still applies, even in the GenAI era. LLMs can abstract some complexity, but they are not a substitute for clean, well-structured data.

Generative AI is transforming how businesses innovate, automate, and create value. Whether you’re exploring use cases or scaling production-ready solutions, our GenAI consultants are here to guide you every step of the way. Reach out to our experts to learn how we can help you design, implement, and optimize GenAI solutions tailored to your unique needs. Let’s build the future—together.