AI Agents are rapidly becoming the organizing principle for how enterprises build and scale generative AI solutions. These agents are no longer simple chat interfaces. They are structured, multi-component systems capable of reasoning, taking action, and integrating with tools and data to complete tasks. The rise of agentic workloads marks a shift in how organizations think about deploying GenAI, moving beyond isolated prompts and toward modular, autonomous systems designed for continuous interaction and improvement.

While early adoption of generative AI relied on handcrafted prompting and experimentation, that approach is no longer sufficient. Manually designed prompts are brittle, difficult to evaluate, and tied closely to the behavior of specific models. As AI agents take on more complex responsibilities within business workflows, the need for structure, evaluation, and governance becomes central to their success.

At Lovelytics, we have moved past the unpredictable world of hand-crafted prompt engineering. Our deep experience as an elite Databricks partner has led us to a more rigorous methodology: Evaluation-led GenAI Development. This approach combines the structured programming model of DSPy with the tracking and observability of MLflow 3.0, and it is key to building the trustworthy, production-grade systems our clients demand.

This blog outlines a modern engineering approach to building AI agents, grounded in recent science and enabled by technologies such as DSPy, MLflow 3.0, and Agent Bricks on the Databricks platform. We explore how modular prompt programming, continuous evaluation, and platform-level observability combine to support scalable agentic workloads. Along the way, we highlight how Lovelytics applies these principles to design GenAI systems that are resilient, measurable, and ready for enterprise deployment.

This blog was inspired by the foundational insights in the Prompting Science Reports by Lennart Meincke, Ethan Mollick, Lilach Mollick, and Dan Shapiro, whose work has helped frame a more systematic understanding of prompting in enterprise AI.

What Prompting Research Reveals

Recent research has brought critical insights into the limitations of prompt engineering as it is commonly practiced. Three studies from the Prompting Science Reports in particular have examined how small changes in prompt structure, strategy, or incentive framing can significantly affect the output of even the most advanced language models. These findings challenge the idea that prompting is a stable or easily generalizable technique.

The first study, titled “Prompt Engineering is Complicated and Contingent,” found that seemingly minor prompt variations can yield dramatically different results across tasks. What works well in one scenario may underperform or even fail entirely in another. This level of unpredictability undermines efforts to standardize or scale GenAI applications.

A second study, “The Decreasing Value of Chain of Thought in Prompting,” questioned the effectiveness of reasoning-based prompting strategies. While chain-of-thought prompting can provide gains for some models and tasks, the research shows diminishing returns for more capable models. Chain-of-thought prompting also increases latency and cost through higher token usage, without delivering consistent improvements in accuracy.

The third study, “I’ll Pay You or I’ll Kill You — But Will You Care?” explored the impact of incentive framing on model performance. Unlike human behavior, language models tend to respond more favorably to reward framing than to punitive language. However, the overall sensitivity to incentives remains limited, which further highlights the disconnect between human reasoning and model behavior.

Taken together, these studies suggest that prompt engineering is not only difficult, but also inherently unstable. As the scale and complexity of AI agents increase, organizations require more dependable, repeatable ways to generate and optimize prompt behavior. This has led to a shift toward prompt programming, an engineering discipline that treats prompts as structured, versioned, and evaluable artifacts.

Toward Reliable Prompting Systems

As AI Agents and generative AI systems become more integral to enterprise operations, the shortcomings of hand-written prompts become increasingly difficult to ignore. Manually authored prompts are difficult to version, sensitive to the specific model they were created for, and not easily transferable across use cases. Small changes in model architecture, deployment environment, or business requirements can cause prompt performance to degrade in unpredictable ways.

One of the most significant challenges is that prompts are often tightly coupled to the language model for which they were designed. When organizations switch models due to cost, latency, licensing, or infrastructure requirements, the original prompts may no longer yield acceptable results. In some cases, they may fail to produce accurate or even usable outputs. This tight coupling introduces fragility that is incompatible with the flexibility required in production systems.

Another concern is maintainability. As AI workloads evolve, teams often need to update prompts to reflect changes in business logic, compliance rules, or user behavior. Without a versioned, structured approach, these updates introduce risks and inconsistencies. Hand-tuned prompts can quickly become a tangle of brittle logic that is difficult to debug or extend.

Structured prompt optimization offers a solution. Rather than writing static text strings, developers define high-level task logic that can be compiled into prompts programmatically. This approach allows for the systematic tuning of prompt components, example selection, and output structure based on empirical performance. It moves the development process away from intuition and toward data-driven optimization.

DSPy is one such framework that enables this shift. It provides a declarative interface for defining task signatures, modular components for common prompting patterns, and built-in optimizers that search for the best-performing configurations. This design allows developers to abstract away the specifics of prompt text while maintaining full control over behavior and evaluation.

Databricks enhances this capability by offering the infrastructure to scale and monitor these workflows. Agent Bricks, for example, enables teams to define GenAI agents using low-code interfaces while incorporating built-in evaluation and optimization loops. It simplifies the deployment and refinement of GenAI applications without requiring repeated manual adjustments. Together, DSPy and Databricks offer a structured, scalable path to prompt optimization that meets the demands of enterprise systems.

At Lovelytics, we have actively studied and embraced these techniques as part of our Evaluation-led GenAI Development methodology. By combining frameworks like DSPy with the power of Databricks, we help organizations build AI Agents that are reliable, measurable, and ready for scale.

Structured Prompt Optimization Using DSPy

As AI Agents scale in production, the fragility of traditional prompt engineering becomes apparent: manual prompts add complexity, slow iteration, and often fail when the underlying model or requirements change. DSPy addresses these limitations by treating prompt development as a modular, programmable process. In parallel, the emerging discipline of context engineering focuses on deliberately structuring the inputs, retrievals, and auxiliary signals that shape an agent’s reasoning and behavior, making system performance more robust and reliable.

At the core of DSPy is the concept of signatures. A signature defines the input and output structure for a task, such as mapping a question to an answer or a query to a summary. These semantic declarations serve as blueprints for modules, which encapsulate reusable logic for specific types of interactions with a language model.
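To make the idea concrete, here is a minimal, framework-free sketch of what a signature captures: instructions plus named input and output fields, compiled into prompt text and parsed back out of the model's reply. This is not DSPy's implementation; the `Signature` dataclass and the helper functions are hypothetical. In DSPy itself you would subclass `dspy.Signature` and declare fields with `dspy.InputField()` and `dspy.OutputField()` (or use the string shorthand `"question -> answer"`), and DSPy's adapters handle prompt formatting and parsing for you.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Signature:
    """Hypothetical stand-in for a task signature: instructions plus named fields."""
    instructions: str
    inputs: tuple[str, ...]
    outputs: tuple[str, ...]


def render_prompt(sig: Signature, **values: str) -> str:
    """Compile a signature and concrete input values into prompt text."""
    missing = [name for name in sig.inputs if name not in values]
    if missing:
        raise ValueError(f"missing inputs: {missing}")
    lines = [sig.instructions, ""]
    lines += [f"{name}: {values[name]}" for name in sig.inputs]
    # Ask for one labeled line per output field so the reply can be parsed.
    lines.append("Reply with one line per field: " + ", ".join(f"{name}: ..." for name in sig.outputs))
    return "\n".join(lines)


def parse_outputs(sig: Signature, completion: str) -> dict[str, str]:
    """Map 'field: value' lines in the model's reply back onto the declared outputs."""
    parsed: dict[str, str] = {}
    for line in completion.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() in sig.outputs:
            parsed[key.strip()] = value.strip()
    missing = [name for name in sig.outputs if name not in parsed]
    if missing:
        raise ValueError(f"reply is missing fields: {missing}")
    return parsed


qa = Signature("Answer the question concisely.", inputs=("question",), outputs=("answer",))
print(render_prompt(qa, question="What is the capital of France?"))
print(parse_outputs(qa, "answer: Paris"))
```

Because the prompt text is generated from the declaration, the same task definition can be re-rendered for a different model or re-optimized without hand-editing strings.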

Modules in DSPy go beyond static prompting. Predict is the most basic module: given a signature (its input-output format), it constructs the prompt, calls the language model, and parses the response into the declared output fields. ChainOfThought builds on this by having the model produce intermediate reasoning steps before its answer, and ReAct interleaves reasoning with calls to external tools to handle more complex, multi-step decision-making.
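The control flow behind a ReAct-style module fits in a few lines. This is a simplified illustration, not DSPy's `dspy.ReAct` implementation: the `Thought`/`Action`/`Observation` text format, the `lookup_warranty` tool, and the scripted model are all hypothetical, chosen so the loop runs without calling a real language model.

```python
import re
from typing import Callable

# Hypothetical tool registry; the tool and its data are illustrative only.
TOOLS: dict[str, Callable[[str], str]] = {
    "lookup_warranty": lambda serial: "expires 2026-03-01" if serial == "SN-42" else "not found",
}


def react(llm: Callable[[str], str], question: str, max_steps: int = 5) -> str:
    """Minimal ReAct-style loop: the model emits Thought/Action lines, the loop
    runs the named tool and appends an Observation, until a Final Answer appears."""
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        step = llm(transcript)
        transcript += step + "\n"
        final = re.search(r"Final Answer:\s*(.+)", step)
        if final:
            return final.group(1).strip()
        action = re.search(r"Action:\s*(\w+)\[(.*?)\]", step)
        if action is None:
            raise ValueError(f"unparseable step: {step!r}")
        tool, arg = action.groups()
        observation = TOOLS[tool](arg) if tool in TOOLS else f"unknown tool {tool}"
        transcript += f"Observation: {observation}\n"
    raise RuntimeError("no final answer within max_steps")


# Scripted stand-in for a language model so the example runs offline.
def scripted_llm(transcript: str) -> str:
    if "Observation:" not in transcript:
        return "Thought: I should check the warranty.\nAction: lookup_warranty[SN-42]"
    return "Thought: I have what I need.\nFinal Answer: The warranty expires 2026-03-01."


print(react(scripted_llm, "When does the warranty for SN-42 expire?"))
```

The `max_steps` cap is a deliberate design choice: agent loops need an explicit bound so a model that never emits a final answer cannot run indefinitely.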

To further support performance, DSPy includes a suite of optimizers that automatically search for more effective prompt instructions and examples. Notable among them is SIMBA, which refines prompt programs using mini-batch optimization over evaluation datasets. Other optimizers, such as BootstrapFewShot and MIPROv2, focus on example selection and instruction tuning based on empirical feedback.
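The core idea these optimizers share (scoring candidate prompt configurations against a metric on held-out examples and keeping the best) can be shown with plain random search. This sketch is deliberately much simpler than SIMBA, BootstrapFewShot, or MIPROv2. BootstrapFewShot, for example, builds demonstrations from program runs that pass the metric, and MIPROv2 proposes and searches over instruction candidates. The toy model, metric, and data below are hypothetical stand-ins for a real language model and evaluation set.

```python
import itertools
import random
from typing import Callable, Optional, Sequence

Example = dict[str, str]
Config = tuple[str, tuple[int, ...]]  # (instruction, indices of few-shot demos)


def random_search(
    run: Callable[[str, list[Example], Example], str],
    metric: Callable[[Example, str], float],
    instructions: Sequence[str],
    trainset: list[Example],
    devset: list[Example],
    num_demos: int = 2,
    num_trials: int = 10,
    seed: int = 0,
) -> tuple[Optional[Config], float]:
    """Sample (instruction, demo subset) configurations and keep the one with
    the highest average metric on the dev set."""
    rng = random.Random(seed)
    space = [
        (instruction, demo_ids)
        for instruction in instructions
        for demo_ids in itertools.combinations(range(len(trainset)), num_demos)
    ]
    best_config: Optional[Config] = None
    best_score = float("-inf")
    for instruction, demo_ids in rng.sample(space, min(num_trials, len(space))):
        demos = [trainset[i] for i in demo_ids]
        score = sum(metric(ex, run(instruction, demos, ex)) for ex in devset) / len(devset)
        if score > best_score:
            best_config, best_score = (instruction, demo_ids), score
    return best_config, best_score


# Toy stand-in for an LM call: ignores demos; only one instruction yields the expected format.
def toy_run(instruction: str, demos: list[Example], ex: Example) -> str:
    return ex["question"].upper() if "uppercase" in instruction else ex["question"]


def exact_match(ex: Example, output: str) -> float:
    return float(output == ex["answer"])


train = [{"question": q, "answer": q.upper()} for q in ("a", "b", "c")]
dev = [{"question": "paris", "answer": "PARIS"}, {"question": "rome", "answer": "ROME"}]
config, score = random_search(toy_run, exact_match, ["Answer.", "Answer in uppercase."], train, dev)
print(config, score)
```

Keeping the metric and the dev set separate from the search procedure is what makes the optimizer swappable: a smarter search strategy can replace `random_search` without touching the task or the evaluation.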

DSPy not only supports prompt modularization, but also facilitates effective context engineering. The ability to declaratively compose retrieval steps, auxiliary functions, and intermediate representations is essential for building robust, multi-step agents. This structured approach minimizes the fragility of traditional prompting and provides the scaffolding necessary for agent-level orchestration.
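Context engineering can be illustrated with a small pipeline that retrieves evidence and assembles it into the model's input under a size budget. This is a hypothetical sketch: word overlap stands in for a real retriever such as vector search, a character budget stands in for a token budget, and the passages are made-up examples. In DSPy, steps like these would typically live inside a custom `dspy.Module`'s `forward` method alongside the language model calls.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercased alphanumeric words; a crude stand-in for real text processing."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, passages: list[str], k: int = 2) -> list[str]:
    """Rank passages by word overlap with the query and keep the top k that overlap at all."""
    q = tokenize(query)
    ranked = sorted(passages, key=lambda p: len(q & tokenize(p)), reverse=True)
    return [p for p in ranked[:k] if q & tokenize(p)]


def build_context(query: str, passages: list[str], max_chars: int = 500) -> str:
    """Assemble the model input: numbered evidence first, then the question,
    stopping before the evidence exceeds the character budget."""
    parts, used = [], 0
    for i, passage in enumerate(retrieve(query, passages), start=1):
        if used + len(passage) > max_chars:
            break
        parts.append(f"[{i}] {passage}")
        used += len(passage)
    evidence = "\n".join(parts) if parts else "(no relevant evidence found)"
    return f"Evidence:\n{evidence}\n\nQuestion: {query}"


passages = [  # illustrative passages, not real data
    "Pump model P-100 seals degrade under high vibration.",
    "Office hours are 9 to 5.",
    "Warranty claims for pump model P-100 are reviewed monthly.",
]
context = build_context("Why do P-100 pump seals fail?", passages)
print(context)
```

The irrelevant passage never reaches the model, which is the point: what goes into the context is decided by explicit, testable code rather than by ad hoc prompt edits.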

DSPy is well-suited for execution on the Databricks platform. Developers can define, compile, and run DSPy programs within notebooks or jobs. Integration with MLflow 3.0 allows for automatic logging of prompt versions, evaluation metrics, and runtime traces. Unity Catalog provides governance over models, evaluation datasets, and prompt artifacts, ensuring enterprise compliance and auditability.
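To show what associating prompt versions with evaluation results means in practice, here is a toy in-memory registry. It is a hypothetical illustration of the bookkeeping only, not MLflow's API; on Databricks, MLflow's prompt tracking and autologging (for example `mlflow.dspy.autolog()`) together with Unity Catalog provide this with persistence, lineage, and access control.

```python
from dataclasses import dataclass, field


@dataclass
class PromptRegistry:
    """Toy in-memory registry: re-registering identical text reuses its version,
    and evaluation metrics are stored per (prompt name, version)."""
    versions: dict[str, list[str]] = field(default_factory=dict)
    metrics: dict[tuple[str, int], dict[str, float]] = field(default_factory=dict)

    def register(self, name: str, template: str) -> int:
        history = self.versions.setdefault(name, [])
        if template in history:
            return history.index(template) + 1  # unchanged text keeps its version
        history.append(template)
        return len(history)  # versions are 1-based

    def load(self, name: str, version: int) -> str:
        return self.versions[name][version - 1]

    def log_metrics(self, name: str, version: int, **values: float) -> None:
        self.metrics.setdefault((name, version), {}).update(values)


registry = PromptRegistry()
v1 = registry.register("warranty_triage", "Classify the claim: {claim}")
v2 = registry.register("warranty_triage", "Classify the warranty claim as LOW, MEDIUM, or HIGH risk: {claim}")
registry.log_metrics("warranty_triage", v2, accuracy=0.8)  # placeholder score, not a real result
print(v1, v2, registry.metrics)
```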

By combining declarative prompt logic, modular design, and automated optimization, DSPy enables a transition from ad hoc prompt engineering to repeatable, maintainable GenAI programming. When deployed on Databricks, it becomes part of a complete system for developing, evaluating, and operationalizing generative AI at scale.

Applying DSPy to Enterprise Agentic Workflows

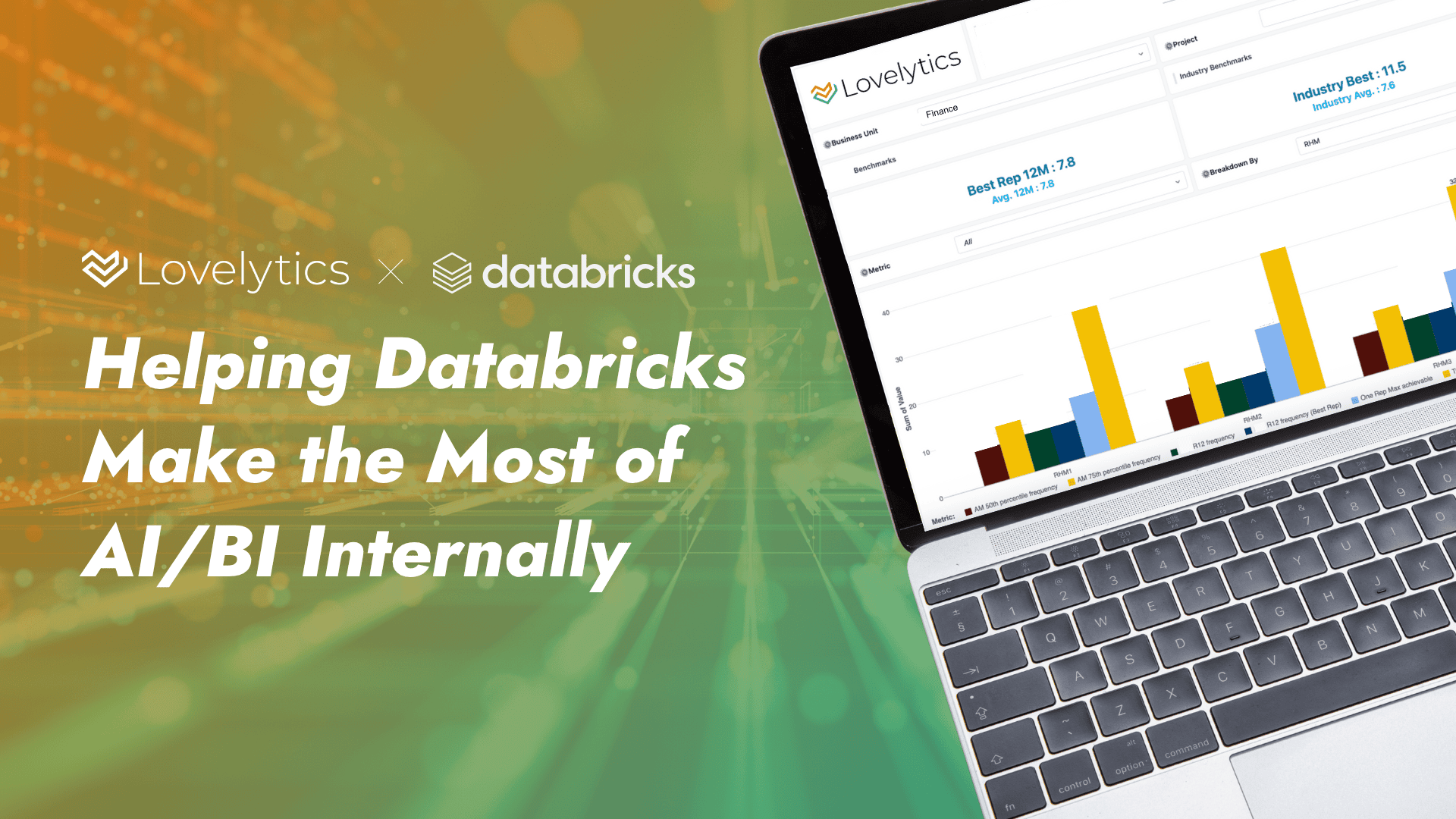

At Lovelytics, we have translated the theoretical advantages of DSPy and evaluation-led GenAI development into measurable business outcomes for enterprise clients. Across a range of industries, our team has deployed GenAI systems and AI agents that benefit from DSPy’s modular design and optimization capabilities, in conjunction with Databricks’ production-grade infrastructure.

In the manufacturing sector, we partnered with a global firm to build a GenAI-powered Customer Service Agent. By using DSPy, we were able to enforce standardized output formats and improve downstream processing through more effective context engineering. This structure proved critical in integrating LLM responses into existing business workflows.

In a related project, we applied DSPy’s optimizers to a Warranty Early Warning System. The goal was to use AI to proactively surface potential issues based on service and usage patterns. By tuning prompts for accuracy and consistency, we improved the system’s precision in identifying warranty risks before they escalated. Here too, context engineering played a pivotal role: shaping the relevant inputs and guiding the agent’s focus to high-signal information was key to that significant gain in precision.

In the communications and media domain, we collaborated with a leading organization to build a GenAI solution for donor segmentation and persona generation. DSPy played a central role in optimizing prompt structures for context clarity, enabling the generation of nuanced donor profiles that could inform tailored engagement strategies. Through deliberate context engineering, we ensured the persona outputs aligned with stakeholder expectations while maintaining consistency across diverse donor segments.

These projects reflect our broader approach to building AI agents that are not only functional but also trustworthy, scalable, and production-ready. They also underscore our belief that evaluation-led GenAI development, powered by tools like DSPy and MLflow, is essential to delivering sustained business value. As context engineering becomes the new foundation for agentic development, we continue to embed structure, observability, and modularity into every solution we deploy.

Observability and Evaluation in Agentic Systems

Effective engineering requires measurement. Without the ability to quantify model behavior, organizations cannot validate accuracy, compare approaches, or identify regressions over time. This principle is especially critical in AI Agents, where outputs are open-ended and traditional evaluation metrics do not always apply. Despite its importance, structured evaluation is often overlooked or bolted on late in the development cycle.

MLflow 3.0 introduces a comprehensive framework for GenAI observability, designed to address this gap. It enables prompt versioning, structured evaluation, and runtime tracing across both development and production environments. With native support for generative model use cases, MLflow 3.0 provides the tools necessary to build evaluation into the core of the GenAI development process.

At the heart of this capability is the evaluate API, which supports a wide range of metrics including correctness, groundedness, fluency, latency, and token usage. Developers can use out-of-the-box LLM-based judges or define custom evaluators tailored to specific business requirements. These evaluations are logged automatically and associated with prompt versions, input data, and model configurations.
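The shape of such an evaluation run (predictions scored row by row by several scorers, then aggregated) can be sketched without any framework. The scorers, dataset, and canned `predict` function here are hypothetical, and output length is counted in whitespace-separated words rather than model tokens. MLflow 3's `mlflow.genai.evaluate()` additionally offers LLM-based judges, logs results to an experiment, and links them to traces and prompt versions; consult its documentation for the exact arguments.

```python
import statistics
import time
from typing import Callable

Scorer = Callable[[dict, str], float]


def evaluate(predict: Callable[[str], str], dataset: list[dict], scorers: dict[str, Scorer]) -> dict[str, float]:
    """Score every prediction with every scorer, then average each column.
    Latency and a crude word-count output length are recorded alongside."""
    rows = []
    for example in dataset:
        start = time.perf_counter()
        output = predict(example["inputs"])
        row = {"latency_s": time.perf_counter() - start, "output_words": float(len(output.split()))}
        row.update({name: scorer(example, output) for name, scorer in scorers.items()})
        rows.append(row)
    return {key: statistics.mean(row[key] for row in rows) for key in rows[0]}


def exact_match(example: dict, output: str) -> float:
    return float(output.strip().lower() == example["expected"].lower())


def cites_policy(example: dict, output: str) -> float:
    # Custom business rule (hypothetical): answers should point to a policy section.
    return float("section" in output.lower())


dataset = [
    {"inputs": "What is the return window?", "expected": "30 days"},
    {"inputs": "Who approves refunds?", "expected": "The store manager"},
]
canned = {"What is the return window?": "30 days", "Who approves refunds?": "See section 4.2"}
results = evaluate(lambda question: canned[question], dataset,
                   {"exact_match": exact_match, "cites_policy": cites_policy})
print(results)
```

Custom scorers like `cites_policy` are where business requirements enter the evaluation; they sit next to generic metrics and are aggregated the same way.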

MLflow also supports human-in-the-loop evaluation through the Review App, allowing subject matter experts to score model outputs and provide structured feedback. These labels can be stored as Delta Lake tables, versioned through Unity Catalog, and reused to drive optimization loops or fine-tuning processes.

Tracing is another core component of MLflow 3.0. It captures granular metadata during prompt execution, including token counts, response latency, intermediate reasoning steps, and tool usage. This data provides critical insights into system behavior, enabling developers to debug, compare variants, and optimize performance over time.
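A trace is essentially a tree of timed spans. The toy decorator below records that tree for nested calls; it is a hypothetical sketch of the concept, not MLflow's implementation. In practice you would use MLflow's `@mlflow.trace` decorator or autologging, which also capture attributes such as token usage that this sketch omits.

```python
import functools
import time
from contextvars import ContextVar
from typing import Any, Callable

_active_span = ContextVar("active_span", default=None)  # span currently executing, if any
TRACES: list[dict] = []  # root spans, one per top-level call


def traced(fn: Callable) -> Callable:
    """Record a span (name, inputs, output, latency, children) per call.
    Calls made while another traced call is running become its children."""
    @functools.wraps(fn)
    def wrapper(*args: Any, **kwargs: Any) -> Any:
        span = {"name": fn.__name__, "inputs": {"args": args, "kwargs": kwargs}, "children": []}
        parent = _active_span.get()
        if parent is None:
            TRACES.append(span)
        else:
            parent["children"].append(span)
        token = _active_span.set(span)
        start = time.perf_counter()
        try:
            span["output"] = fn(*args, **kwargs)
            return span["output"]
        finally:
            span["latency_s"] = time.perf_counter() - start
            _active_span.reset(token)
    return wrapper


@traced
def search_docs(query: str) -> list[str]:
    return ["doc-1", "doc-2"]  # stand-in for a retriever


@traced
def draft_answer(query: str, docs: list[str]) -> str:
    return f"answer using {len(docs)} docs"  # stand-in for an LM call


@traced
def agent(query: str) -> str:
    return draft_answer(query, search_docs(query))


agent("Why was claim 17 flagged?")
root = TRACES[0]
print(root["name"], [child["name"] for child in root["children"]], root["output"])
```

Using a `ContextVar` rather than a plain global keeps the "current span" correct per thread or async task, which matters once an agent runs tool calls concurrently.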

With Databricks and tools like DSPy and MLflow 3.0, context engineering becomes a measurable and tunable practice, not just a one-off design decision. By integrating evaluation, tracing, and governance, MLflow 3.0 transforms prompt engineering from an informal design activity into a measurable, repeatable engineering discipline. When combined with DSPy and executed on Databricks, it enables full-lifecycle GenAI and Agent development with clear visibility into quality, cost, and performance at every stage.

Iterative Refinement in Agentic Workflows

Scaling AI Agents from prototypes to production requires more than technical experimentation. It demands a disciplined, closed-loop development process that brings together design, execution, evaluation, and continuous refinement. This approach ensures that GenAI systems not only perform well initially but continue to evolve based on measurable feedback and changing requirements.

DSPy provides the foundation for programmatic development through declarative task logic, reusable modules, and automated prompt optimization. MLflow 3.0 complements this by introducing structured tracking, evaluation, and runtime observability. Together, they allow teams to define, version, and measure GenAI workflows with the same rigor expected of enterprise software systems.
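One concrete way to close the loop is a promotion gate: a new prompt program or configuration replaces the current one only if its evaluation results improve the primary metric without regressing the others. The sketch below is a hypothetical illustration; the metric names, tolerance, and scores are made-up placeholders.

```python
def regressions(baseline: dict[str, float], candidate: dict[str, float],
                higher_is_better: dict[str, bool], tolerance: float = 0.0) -> list[str]:
    """List the metrics on which the candidate is worse than the baseline by more than `tolerance`."""
    worse = []
    for metric, higher in higher_is_better.items():
        delta = candidate[metric] - baseline[metric]
        if (higher and delta < -tolerance) or (not higher and delta > tolerance):
            worse.append(metric)
    return worse


def should_promote(baseline: dict[str, float], candidate: dict[str, float],
                   higher_is_better: dict[str, bool], primary: str, tolerance: float = 0.0) -> bool:
    """Promote only if the primary metric strictly improves and nothing regresses."""
    if higher_is_better[primary]:
        improved = candidate[primary] > baseline[primary]
    else:
        improved = candidate[primary] < baseline[primary]
    return improved and not regressions(baseline, candidate, higher_is_better, tolerance)


directions = {"correctness": True, "latency_s": False}
baseline = {"correctness": 0.80, "latency_s": 1.20}   # placeholder scores
candidate = {"correctness": 0.85, "latency_s": 1.25}  # placeholder scores
print(should_promote(baseline, candidate, directions, primary="correctness", tolerance=0.1))
print(regressions(baseline, candidate, directions))
```

The tolerance makes the tradeoff explicit: with a 0.1 allowance the small latency increase is accepted, while with zero tolerance it is reported as a regression and blocks promotion.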

Databricks brings this loop full circle by enabling execution and monitoring at scale. Through seamless integration with Unity Catalog and Delta Lake, teams can maintain full lineage and governance over data, prompts, models, and evaluation sets. AI Agents developed on Databricks can be deployed across environments with traceability and compliance.

Agent Bricks extends these capabilities by offering a low-code interface for developing and optimizing LLM-based agents. It integrates tools, memory, and retrieval systems into configurable workflows. A key differentiator is its use of Agent Learning from Human Feedback, which allows subject matter experts to provide natural language feedback that is automatically converted into technical refinements. This reduces the need for manual tuning and accelerates iteration.

Agent Bricks also supports the generation of domain-specific synthetic benchmarks, enabling teams to evaluate system performance across realistic and diverse scenarios. It also surfaces the tradeoffs between quality and cost, allowing organizations to make informed decisions about optimization strategies.
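Weighing quality against cost is a Pareto-frontier question: a configuration is worth considering only if no other configuration is at least as good in quality and no more expensive, while being strictly better on one of the two. The sketch below is a generic illustration of that idea, not how Agent Bricks computes its tradeoffs; the configuration names and numbers are invented placeholders.

```python
from typing import NamedTuple


class Candidate(NamedTuple):
    name: str
    quality: float  # e.g., mean benchmark score; higher is better
    cost: float     # e.g., cost per 1k requests; lower is better


def pareto_frontier(candidates: list[Candidate]) -> list[Candidate]:
    """Keep candidates that no other candidate dominates, sorted from cheapest to most expensive."""
    frontier = []
    for c in candidates:
        dominated = any(
            o.quality >= c.quality and o.cost <= c.cost and (o.quality > c.quality or o.cost < c.cost)
            for o in candidates
        )
        if not dominated:
            frontier.append(c)
    return sorted(frontier, key=lambda c: c.cost)


candidates = [  # placeholder values, not measured results
    Candidate("small-model", 0.70, 1.0),
    Candidate("small-model+few-shot", 0.78, 1.5),
    Candidate("large-model", 0.80, 6.0),
    Candidate("large-model+cot", 0.79, 9.0),
]
frontier = pareto_frontier(candidates)
print([c.name for c in frontier])
```

Anything off the frontier (here the most expensive option, which is both costlier and slightly worse than another) can be discarded outright, leaving a business decision only among the remaining points.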

When these components are combined—DSPy for structured logic, MLflow 3.0 for evaluation, and Agent Bricks for feedback and refinement—they form a robust and repeatable loop for AI Agent development. Databricks provides a unified platform where this workflow can be built, scaled, and governed. The result is a system that is not only flexible and efficient but also transparent, reliable, and continually improving.

Databricks as the Ideal Platform for Agentic AI

Enterprise adoption of AI Agents requires more than functional prototypes. It requires a production-ready foundation that supports structured development, automated evaluation, secure deployment, and ongoing optimization. Databricks provides this foundation through a tightly integrated platform that combines model orchestration, prompt versioning, agent development, and governance.

MLflow 3.0 serves as the core for observability and experimentation. It offers native support for generative AI use cases, including prompt versioning, evaluation metrics, and runtime tracing. Teams can track model behavior across environments, associate evaluation results with specific inputs and configurations, and capture meaningful insights for decision-making. These capabilities are critical to building reproducible and auditable GenAI systems at scale.

Unity Catalog supports governance and compliance by managing access, lineage, and ownership of all data, models, prompts, and evaluation assets. It enables secure collaboration across departments and ensures consistency throughout the development lifecycle.

Databricks also provides first-party hosting of advanced foundation models, such as Meta’s Llama models and models from Anthropic, Google, and other providers, which can be deployed directly into GenAI workflows. These models can be integrated programmatically through DSPy or operationalized as agents using the Mosaic AI Agent Framework. For external models, Databricks provides the AI Gateway, a centralized service for managing, governing, and securing access to generative AI models and their endpoints. It exposes both Databricks-hosted and external LLMs, such as OpenAI’s models, through a unified API.

Agent Bricks simplifies the deployment and refinement of agents through a low-code interface. A central capability of Agent Bricks is Agent Learning from Human Feedback. This feature allows subject matter experts to provide natural language feedback that is transformed into targeted adjustments to prompt logic, retrieval steps, or configuration parameters. This feedback loop reduces manual rework and enables agents to adapt quickly to domain-specific requirements.

Agent Evaluation, integrated into MLflow, supports automated scoring using LLM-based judges, domain-guided evaluation criteria, and expert-generated labels. The Review App makes it easy for teams to annotate outputs and build curated evaluation datasets. These datasets power performance monitoring across both development and production environments.

Together, DSPy, MLflow 3.0, Agent Bricks, and the Mosaic AI Agent Framework form a complete ecosystem for building, optimizing, and governing generative AI and AI Agent systems. Databricks offers the infrastructure, integrations, and scale to support this ecosystem, making it the ideal platform for organizations seeking to operationalize GenAI with confidence and clarity.

At Lovelytics, we bring deep expertise in this ecosystem, helping enterprises navigate the end-to-end journey of agent development, evaluation, and deployment on Databricks.

Conclusion

As AI Agents move from innovation to implementation, the path forward requires a shift from trial-and-error prompting toward structured, evaluable, and scalable systems. Research has shown that prompt effectiveness is highly variable and context-dependent, making manual methods unreliable in production. At the same time, the complexity of enterprise environments demands tools that enable version control, governance, continuous evaluation, and adaptability.

The approach outlined in this blog reflects that reality. With DSPy, prompt logic becomes modular and declarative. With MLflow 3.0, evaluation becomes measurable and repeatable. With Agent Bricks and the Mosaic AI Agent Framework, optimization becomes systematic and user-informed. Databricks provides the cohesive platform that brings all of these components together—backed by secure infrastructure, native model hosting, and integration with open and proprietary LLMs.

At Lovelytics, the GenAI team has pioneered a methodology known as Evaluation-led GenAI Development. This approach emphasizes the role of measurable, task-specific evaluation in every phase of the development lifecycle. It aligns directly with the technologies and principles explored in this blog. Combined with our Quality Delivery Framework and elite partnership with Databricks, this methodology enables us to deliver production-ready AI Agents that are optimized, governed, and adaptable.

If your organization is looking to go beyond experimentation and into structured, enterprise-scale AI Agents, we invite you to connect with us. Let us show you how our engineering-led approach can accelerate your adoption of GenAI with clarity and confidence.