You Have A 1 in 9.2 Quintillion Chance Of Building The Perfect NCAA Tournament Bracket

“Brackets, Brackets, and more Brackets”

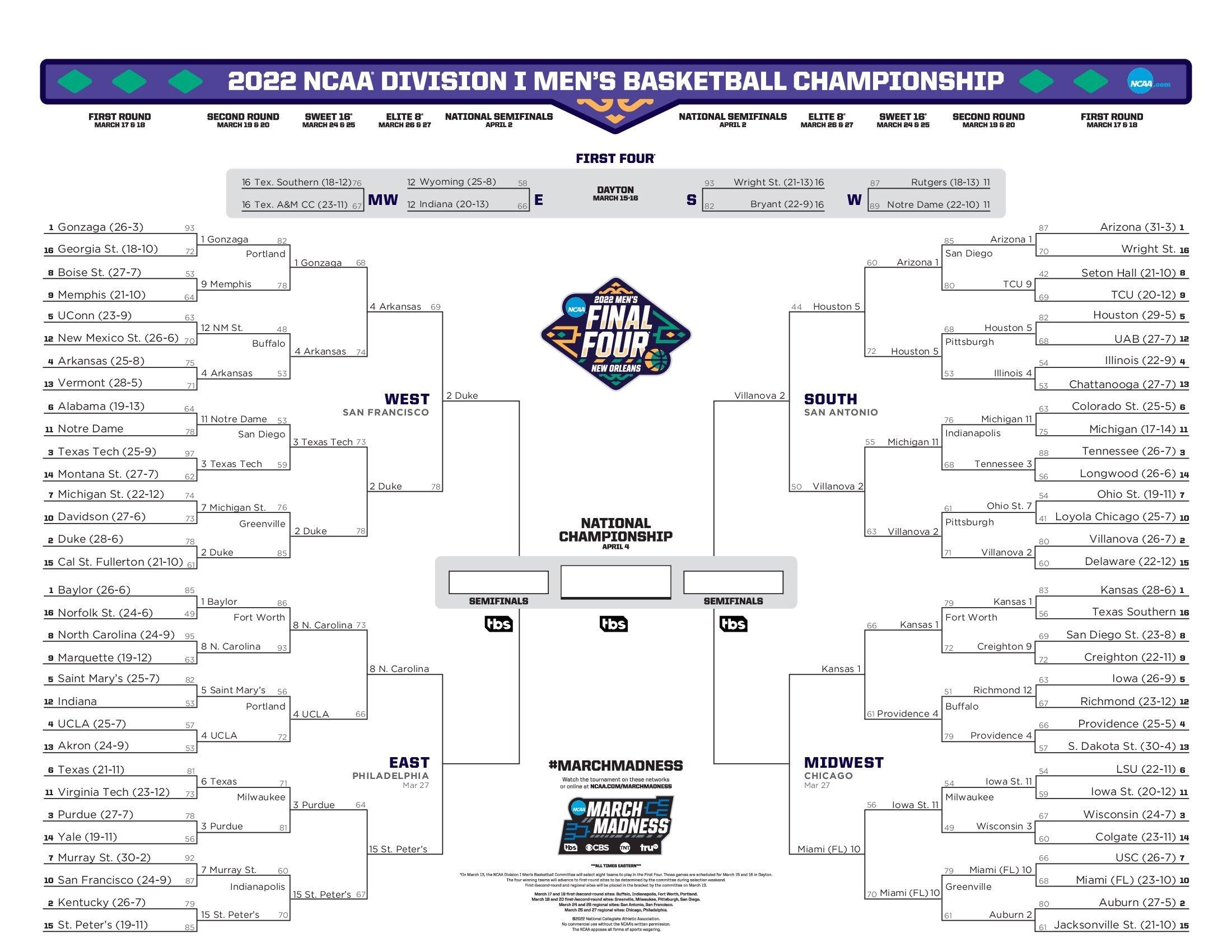

It is that time of the year again. March Madness is here, and people around the globe are rushing to fill out tournament brackets, hoping to pick the winner of every game and, of course, rooting for their favorite team. Like many workplaces, Lovelytics employees get super excited about this event. We are first and foremost data geeks, but we are also basketball fans.

Like all sports fans, we are cheering, booing, celebrating or crying the entire tournament. But as data nerds, we are trying to find clarity in the chaos of the data, asking ourselves: “How can we use our knowledge and expertise to make better bracket decisions and remove the bias that is so prevalent in bracket selection each year?” And is there a way to apply this approach to our clients’ business challenges?

Our March Madness challenge: How can we leverage machine learning best practices using Databricks, a lakehouse platform, to create successful bracket predictions?

“The Madness in the Odds”

For context, there are 63 games in an NCAA tournament bracket, and each game has two possible winners. The number of possible brackets is therefore 2^63 ≈ 9.2 quintillion, which is roughly a 9 followed by 18 zeros. Said another way, if every game were a coin flip, the odds of picking a perfect bracket would be 1 in 9.2 quintillion.
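The arithmetic is easy to check. Here is a minimal Python sketch, assuming every game is an independent coin flip (a simplification that ignores seeding and team strength); the variable names are ours, chosen for illustration:

```python
# Each of the 63 games has two possible winners, so a full bracket is
# one of 2**63 outcomes. Treating them as equally likely is an assumption.
GAMES_IN_BRACKET = 63

total_brackets = 2 ** GAMES_IN_BRACKET
perfect_bracket_probability = 1 / total_brackets

print(f"{total_brackets:,} possible brackets")
print(f"about {total_brackets / 1e18:.1f} quintillion")
```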

How Can We Improve Our Odds? – Deciding What To Predict

With a 64-team tournament bracket, it is challenging to formulate exactly how and what to predict, especially since the matchups in later rounds are only defined once teams have won their previous games. We therefore opted to predict the outcome of every possible matchup among the 64 tournament teams: 64*63/2 = 2016 possible matches.
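The count of 2016 can be reproduced by enumerating every unordered pair of teams. This is only a sketch: the integers below are placeholder slots, not real team ids.

```python
from itertools import combinations

TEAMS_IN_FIELD = 64

# Placeholder slots 0..63 stand in for the real team ids.
team_slots = range(TEAMS_IN_FIELD)

# Every unordered pair of distinct teams is one possible matchup.
all_matchups = list(combinations(team_slots, 2))

print(len(all_matchups))
```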

Our approach is to identify each team with a unique numerical id that is the same every year. If a team is new to the tournament (i.e., has never played in the tournament before), it is assigned a new id. So in each of our 2016 possible matches, there is always one team with a lower numerical id than the other. We then predict whether the lower-id team wins: if it does, the match is labeled Category 1; if the team with the higher id wins, the match is labeled Category 0.
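To make the labeling rule concrete, here is a small sketch of how a single game result could be turned into a Category. The function names and the ids in the usage note are hypothetical, not taken from our pipeline.

```python
from typing import Tuple


def matchup_key(team_a_id: int, team_b_id: int) -> Tuple[int, int]:
    """Order a pair of team ids so the lower id always comes first."""
    if team_a_id == team_b_id:
        raise ValueError("a team cannot play itself")
    return (min(team_a_id, team_b_id), max(team_a_id, team_b_id))


def category(team_a_id: int, team_b_id: int, winner_id: int) -> int:
    """Return 1 if the lower-id team won the game, 0 if the higher-id team won."""
    low_id, high_id = matchup_key(team_a_id, team_b_id)
    if winner_id not in (low_id, high_id):
        raise ValueError("winner must be one of the two teams")
    return 1 if winner_id == low_id else 0
```

For example, with made-up ids 1101 and 1455, `category(1455, 1101, 1101)` gives 1 regardless of the order in which the two teams are passed in, which is the point of keying on the lower id.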

“Best Practices”

Like many modern data engineering approaches, we opted to follow a medallion architecture to tackle our challenge, aiming to provide our project with a cohesive and organized structure by dividing it into clearly identifiable layers.

Bronze Layer:

As a first step, we need to ingest our raw data. This step is considered the “Bronze Layer”. We can divide it into two phases:

- Loading raw training data

- Loading raw testing data

There are countless resources to pull NCAA historical data from. We obtained most of the previous seasons’ (1985-2020) results and statistics from Kaggle, and we updated this data to include last year’s results. The current season data serves as our testing data. It was obtained from https://basketball.realgm.com and https://masseyratings.com.

Silver Layer:

Next, we prepare our data to be consumed by the machine learning algorithms. In this step, known as the Silver Layer, we carry out the necessary transformations and perform feature engineering (selecting and creating new features, filling in missing values) on the raw training and testing sets in order to turn them into the source of truth for our models.
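As an illustration only, the sketch below shows two typical Silver Layer steps in plain Python: filling a missing value with the column mean and building one feature row per matchup from the difference between the two teams' statistics. The column names, the toy numbers and the mean-imputation choice are all assumptions made for this example, not a description of our actual features.

```python
from statistics import mean
from typing import Dict, List, Optional

Row = Dict[str, Optional[float]]


def fill_missing_with_mean(rows: List[Row], column: str) -> List[Row]:
    """Replace None in `column` with the mean of the observed values."""
    observed = [row[column] for row in rows if row[column] is not None]
    fallback = mean(observed)
    return [
        {**row, column: fallback if row[column] is None else row[column]}
        for row in rows
    ]


def matchup_features(low_team: Row, high_team: Row, columns: List[str]) -> Dict[str, float]:
    """Build difference features (lower-id team minus higher-id team)."""
    return {f"{col}_diff": low_team[col] - high_team[col] for col in columns}


# Toy, made-up season statistics for three hypothetical teams.
team_stats = [
    {"team_id": 1, "win_pct": 0.80, "avg_points": 78.0},
    {"team_id": 2, "win_pct": 0.65, "avg_points": None},
    {"team_id": 3, "win_pct": 0.70, "avg_points": 72.0},
]

cleaned = fill_missing_with_mean(team_stats, "avg_points")
features = matchup_features(cleaned[0], cleaned[1], ["win_pct", "avg_points"])
```

Expressing each matchup as lower-id minus higher-id keeps the features aligned with the Category label, which is also defined from the lower-id team's point of view.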

Gold Layer:

It is now time to build our model(s). This step is commonly known as the Gold Layer, where we can define business use cases. We opted to use three classical machine learning algorithms:

- Logistic Regression,

- Random Forest, and

- Gradient Boosted Trees

All three algorithms are part of MLlib, Spark’s native machine learning library, which is available on Databricks. We developed a voting system to combine the predictions of our models.
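One simple way to combine three classifiers is a majority vote, sketched below. This is an assumption for illustration: a plain, unweighted majority is just one possible voting scheme, and the function name is hypothetical.

```python
from collections import Counter
from typing import Sequence


def majority_vote(predictions: Sequence[int]) -> int:
    """Return the Category (0 or 1) predicted by most of the models."""
    if len(predictions) % 2 == 0:
        # With two classes, an odd number of voters can never tie.
        raise ValueError("use an odd number of models to avoid ties")
    return Counter(predictions).most_common(1)[0][0]


# Example: hypothetical outputs of the three models for one matchup.
combined = majority_vote([1, 0, 1])
```

With three binary models there is always a clear winner, so no tie-breaking rule is needed.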

As a final step, we can feed our test data to the models and obtain our predictions. Following these best practices lets us easily adapt our implementation to other use cases, and the project can serve as a good template for many machine learning projects.
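Because every possible pair of teams has a predicted Category, a full bracket can be filled in by looking up each game as it comes up. The sketch below assumes a dictionary keyed by (lower id, higher id) and a list of teams in bracket order; both structures, the function names and the four-team demo are hypothetical.

```python
from typing import Dict, List, Tuple


def predicted_winner(team_a: int, team_b: int,
                     categories: Dict[Tuple[int, int], int]) -> int:
    """Look up the pairwise prediction: Category 1 means the lower id wins."""
    low_id, high_id = sorted((team_a, team_b))
    return low_id if categories[(low_id, high_id)] == 1 else high_id


def play_bracket(bracket_order: List[int],
                 categories: Dict[Tuple[int, int], int]) -> List[List[int]]:
    """Advance winners round by round; returns the winners of each round."""
    size = len(bracket_order)
    if size < 2 or size & (size - 1) != 0:
        raise ValueError("field size must be a power of two")
    rounds = []
    current = list(bracket_order)
    while len(current) > 1:
        # Adjacent entries in the list play each other.
        current = [
            predicted_winner(current[i], current[i + 1], categories)
            for i in range(0, len(current), 2)
        ]
        rounds.append(current)
    return rounds


# Four-team demo with made-up ids and made-up predictions for all 6 pairs.
demo_categories = {
    (10, 20): 1, (10, 30): 1, (10, 40): 1,
    (20, 30): 0, (20, 40): 1, (30, 40): 1,
}
demo_rounds = play_bracket([10, 40, 20, 30], demo_categories)
```

In the demo, team 10 beats 40 and team 30 beats 20 in the first round, then team 10 beats 30 in the final. The same loop would work for a 64-team field given all 2016 pairwise predictions.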

“Why Spark and Databricks”

Spark is a multi-language engine for executing data engineering, data science, and machine learning on single-node machines or clusters. As we reference Spark, we also need to reference Databricks, which is the perfect platform for our project.

Databricks provides an optimized implementation of Spark. From the flexibility of ingesting data from multi-cloud sources to the ability to combine highly performant data and machine learning pipelines, Databricks has everything we need.

While building out our Silver and Gold Layers, we leveraged Spark on Databricks to carry out tasks in parallel in an optimized and efficient way. Trying to do this using a traditional system would take a long time and would eat up costly resources.

Specifically, we carried out complex transformations during our Silver Layer and performed cross-validation for tuning model parameters during our Gold Layer. Databricks also has Managed MLflow, which allows us to run experiments with any ML library, framework or language, and automatically keeps track of parameters, metrics, code, and models from each experiment.

Additionally, Databricks has an API to schedule and automate job executions. We consolidated our full project by creating a job that allows us to easily debug, tune and modify our machine learning pipeline from top to bottom. These features provide tremendous value for our customers due to the ease of data ingestion and the ability to track results and make real-time adjustments to large-scale machine learning pipelines.

“Watching Our Model”

As I write this blog post, we are about to start the Final Four round of the tournament. Our test bracket is in the 94th percentile according to the official NCAA website, and we have 91.6% accuracy so far.

Similar to machine learning models we build for our clients, we know that as things change and new variables come about, our model will need to change and continue to learn from every game, every transaction, every data point. Our models will get smarter, more accurate and be able to improve our decision making as they evolve.

We are looking forward to next year’s bracket, and thanks to Databricks and Spark, we will have a better model and will spend considerably less time and resources predicting who will win it all.

So, what do you think? Is March Madness that crazy after all?

Randy is a data consultant for Lovelytics. To learn more about Randy’s work, our machine learning capabilities, Spark, Databricks or building models to help you predict the outcome of a business challenge, connect with us by email [email protected].